This PR (https://github.com/desktop/desktop/pull/5154) adds `--recurse-submodules` to the options of `git checkout`, `pull` and `fetch`, so that submodules are correctly updated when switching branches.

This makes submodules “just work” a little better. Without this, the user needs to update submodules manually, which is not possible using GitHub Desktop.

istiod vs istio pilot

Istio’s control plane is, itself, a modern, cloud-native application. Thus, it was built from the start as a set of microservices. Individual Istio components like service discovery (Pilot), configuration (Galley), certificate generation (Citadel) and extensibility (Mixer) were all written and deployed as separate microservices. The need for these components to communicate securely and be observable, provided opportunities for Istio to eat its own dogfood (or “drink its own champagne”, to use a more French version of the metaphor!).

[…] in Istio 1.5, we’ve changed how Istio is packaged, consolidating the control plane functionality into a single binary called istiod.

https://istio.io/latest/blog/2020/istiod/

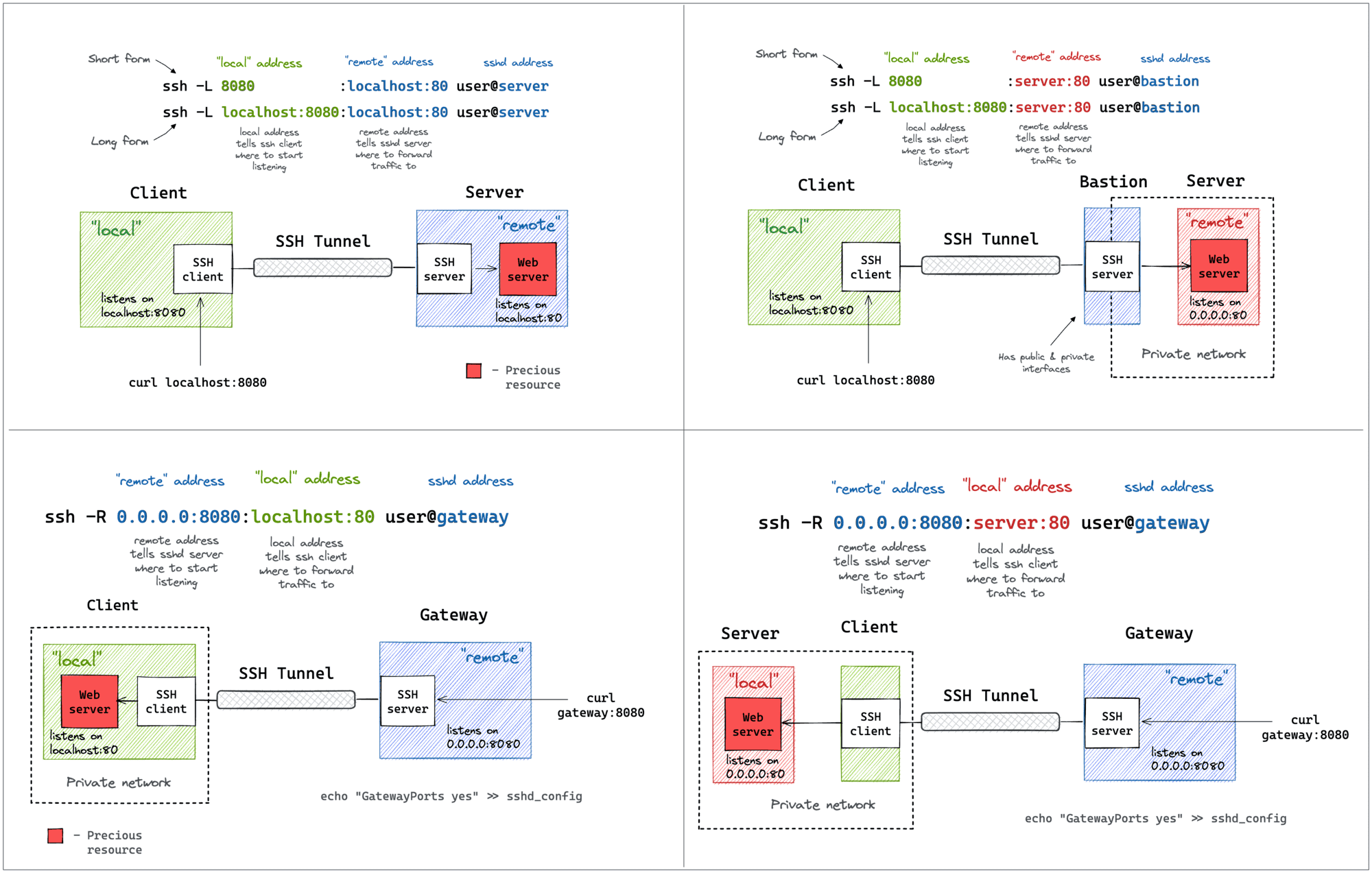

ssh tunnels

kubectl debug

Sometimes, it might be a good idea to copy a Pod before starting the debugging. Luckily, the `kubectl debug` command has a flag for that: `--copy-to <new-name>`. The new Pod won’t be owned by the original workload, nor will it inherit the labels of the original Pod, so it won’t be targeted by a potential Service object in front of the workload. This should give you a quiet copy to investigate!

https://iximiuz.com/en/posts/kubernetes-ephemeral-containers/

```shell
kubectl debug -it -c debugger --image=busybox \
  --copy-to test-pod \
  --share-processes \
  ${POD_NAME}
```

Rebuilding my desktop setup

In my day-to-day work I need to run some containers locally for development and operations. Until now, I used Docker on my desktop, a 2020 MacBook Pro with 16 GB of RAM.

Lately, I had to set tight Docker Desktop resource limits (4 GB RAM, 2 CPUs) to keep locally running containers from affecting the responsiveness of the other apps on my desktop. Even then, the laptop fans made a lot of noise, things became a bit bumpy, and some container jobs took extra time to complete on only 2 CPUs…

Additionally, the x86 MacBook Pro is a dead product, but for some of us moving to ARM has implications for the way we build and test containers for production x86 cloud infrastructure, so I would prefer to stick to x86 Docker hosts. What should I do when I need to replace my laptop?

Moving to a different desktop OS is not an option for me at the moment. Going back to Windows only to end up running everything on WSL, or moving to a Linux desktop and using Office 365 in the browser, seems too annoying to me.

So my requirements are:

- Stay on macOS if possible.

- Run Docker containers on x86; multi-platform containers are not a valid option for me.

- Avoid resource starvation for desktop apps.

So I decided to split up my environment: stay on my current MacBook Pro, but use an additional Intel NUC as my development Docker runtime (without any VM layer). That lets local apps such as VSCode, the browser, Office 365, etc. use all of my laptop’s resources and run smoothly.

For my tests, I used a NUC7CJYSAL with 8 GB of RAM and a 512 GB SATA SSD. It has a dual-core Celeron CPU without Hyper-Threading (only 2 hardware threads). I installed Ubuntu LTS on it and set up key-based SSH authentication so I can log in without a password.

Using VSCode’s remote (SSH) option, I can run the IDE on my laptop while working on the NUC’s filesystem. The VSCode terminal is a NUC terminal, so any docker command runs against the NUC’s Docker runtime. Of course, you need your repos cloned on the NUC, but with SSH agent forwarding you can ‘export’ your SSH keys over the SSH connection, so your GitHub key is magically available to you on the NUC.

But would this setup mean I need to carry both the laptop and the NUC everywhere I go? That is the magic part. I’m already a user of the Tailscale personal VPN service, so I added my NUC as a device on my Tailscale account. Now, from my laptop, I can reach the NUC’s VPN IP from anywhere with internet access while the NUC stays running at home. An extra benefit is that you can keep things running on the NUC and disconnect your laptop (e.g., running build or deployment jobs during your daily commute).

If you add an /etc/hosts alias on your laptop and combine it with key-based SSH authentication, you can easily connect from VSCode or iTerm. You can even share folders on the NUC and connect to them from the desktop if needed.

With this configuration, I can evolve the desktop and the NUC independently, so in the future I can switch to an ARM laptop or a different OS, and dedicate the laptop’s resources to UI-based apps (VSCode, browser, Office) without competing with the development runtime on the NUC.

I’m currently using this setup and have decided to stay with it, but with a more powerful i7/16 GB NUC. I hope this post gives you some ideas!

Container port…

[…] containerPort does not set the port that will be exposed, it is purely informational and had no effect on Kubernetes networking. It is however good practice to document the port that the container is listening on for the next poor soul that is trying to figure out your system.

https://stackoverflow.com/questions/55741170/container-port-pods-vs-container-port-service

The EXPOSE instruction informs Docker that the container listens on the specified network ports at runtime. It does not make the ports of the container accessible to the host. To do that, you must use the `-p` flag to publish a range of ports.

https://stackoverflow.com/questions/35548843/does-ports-on-docker-compose-yml-have-the-same-effect-as-expose-on-dockerfile

Mutable types as function parameter default value

… each default value is evaluated when the function is defined—i.e., usually when the module is loaded—and the default values become attributes of the function object. So if a default value is a mutable object, and you change it, the change will affect every future call of the function.

Fluent Python, 2nd Edition, Luciano Ramalho
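A minimal illustration of the quote above, with hypothetical function names `append_bad` and `append_good`. The default list lives in the function object’s `__defaults__`, so mutating it leaks state between calls; the `None` sentinel in the second function is the common idiom for avoiding that.

```python
def append_bad(item, bucket=[]):
    """The default list is created once, when `def` runs, and shared by every call."""
    bucket.append(item)
    return bucket


def append_good(item, bucket=None):
    """Use None as a sentinel and build a fresh list on each call instead."""
    if bucket is None:
        bucket = []
    bucket.append(item)
    return bucket


# The shared default is stored on the function object itself.
print(append_bad.__defaults__)  # ([],)
print(append_bad(1))            # [1]
print(append_bad(2))            # [1, 2]  <- state leaked from the first call
print(append_bad.__defaults__)  # ([1, 2],)
print(append_good(1))           # [1]
print(append_good(2))           # [2]
```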

Node.js event loop

The JavaScript event loop takes the first call in the callback queue and adds it to the call stack as soon as it’s empty.

JavaScript code is being run in a run-to-completion manner, meaning that if the call stack is currently executing some code, the event loop is blocked and won’t add any calls from the queue until the stack is empty again.

https://felixgerschau.com/javascript-event-loop-call-stack/
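Python’s asyncio follows the same run-to-completion rule, so it can serve as a runnable analogue (this is not Node.js, and the callback names are hypothetical): callbacks registered with `loop.call_soon()` wait in a FIFO queue and only run after the currently executing callback has returned, no matter how long it keeps working.

```python
import asyncio

log = []


def second(loop):
    log.append("second callback")
    loop.stop()  # nothing else is queued, so let run_forever() return


def first(loop):
    log.append("first callback: start")
    # Queue `second`; it cannot interrupt us, it waits until `first` returns.
    loop.call_soon(second, loop)
    total = sum(range(1_000_000))  # busy work that keeps this callback running
    log.append(f"first callback: end (total={total})")


def main():
    loop = asyncio.new_event_loop()
    try:
        loop.call_soon(first, loop)
        loop.run_forever()
    finally:
        loop.close()


main()
for line in log:
    print(line)
```

Even though `second` is queued before the busy work starts, it only runs once `first` has finished, just like a queued JavaScript callback waits for an empty call stack.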

DNS CAA

CAA is a security standard that was approved in 2017 and which allows domain owners to prevent Certificate Authorities (CAs; organizations that issue TLS certificates) to issue certificates for their domains.

Domain owners can add a “CAA field” to their domain’s DNS records, and only the CA listed in the CAA field can issue a TLS certificate for that domain.

All Certificate Authorities — like Let’s Encrypt — must follow the CAA specification by the letter of the law or face steep penalties from browser makers.

https://www.zdnet.com/article/lets-encrypt-to-revoke-3-million-certificates-on-march-4-due-to-bug/
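A CAA record in a zone file looks like `example.com. CAA 0 issue "letsencrypt.org"`. The sketch below is a simplified version of the check a CA performs for the `issue` property; it assumes the relevant CAA record set has already been resolved and ignores `issuewild`, `iodef`, the critical flag and issuer parameters, so it is not the full RFC 8659 algorithm. The function name `ca_may_issue` is hypothetical.

```python
from typing import List, Tuple

# A CAA record as (flags, tag, value), e.g. (0, "issue", "letsencrypt.org").
CaaRecord = Tuple[int, str, str]


def ca_may_issue(records: List[CaaRecord], ca_domain: str) -> bool:
    """Simplified "issue" check for a non-wildcard certificate (see assumptions above)."""
    issuers = [value for _flags, tag, value in records if tag.lower() == "issue"]
    if not issuers:
        # No "issue" property: this sketch treats issuance as unrestricted.
        return True
    for value in issuers:
        # The issuer domain is everything before an optional ";parameters" part.
        # An empty issuer domain (e.g. the value ";") authorizes no CA at all.
        domain = value.split(";", 1)[0].strip()
        if domain and domain.lower() == ca_domain.lower():
            return True
    return False


records = [(0, "issue", "letsencrypt.org"), (0, "iodef", "mailto:security@example.com")]
print(ca_may_issue(records, "letsencrypt.org"))   # True
print(ca_may_issue(records, "other-ca.example"))  # False
```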

AGIC and let’s encrypt

AGIC helps eliminate the need to have another load balancer/public IP in front of the AKS cluster and avoids multiple hops in your datapath before requests reach the AKS cluster. Application Gateway talks to pods using their private IP directly and does not require NodePort or KubeProxy services. This also brings better performance to your deployments.

https://docs.microsoft.com/en-us/azure/application-gateway/ingress-controller-overview

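```yaml
# extensions/v1beta1 Ingress was removed in Kubernetes 1.22,
# so this is the same example written against networking.k8s.io/v1.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: aspnetapp
  annotations:
    kubernetes.io/ingress.class: azure/application-gateway
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: aspnetapp
            port:
              number: 80
```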

ingress-shim watches `Ingress` resources across your cluster. If it observes an `Ingress` with annotations described in the Supported Annotations section, it will ensure a `Certificate` resource with the name provided in the `tls.secretName` field and configured as described on the `Ingress` exists.

https://cert-manager.io/docs/usage/ingress/

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    # add an annotation indicating the issuer to use.
    cert-manager.io/cluster-issuer: nameOfClusterIssuer
  # object and namespace names must be lowercase (RFC 1123), so not "myIngress"
  name: my-ingress
  namespace: my-ingress
spec:
  rules:
  - host: example.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: myservice
            port:
              number: 80
  tls: # < placing a host in the TLS config will determine what ends up in the cert's subjectAltNames
  - hosts:
    - example.com
    secretName: myingress-cert # < cert-manager will store the created certificate in this secret.
```

The ACME Issuer type represents a single account registered with the Automated Certificate Management Environment (ACME) Certificate Authority server. When you create a new ACME `Issuer`, cert-manager will generate a private key which is used to identify you with the ACME server.

https://cert-manager.io/docs/configuration/acme/#solving-challenges

SSL is handled by the ingress controller, not the ingress resource. Meaning, when you add TLS certificates to the ingress resource as a kubernetes secret, the ingress controller access it and makes it part of its configuration.

https://devopscube.com/configure-ingress-tls-kubernetes/

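```yaml
apiVersion: v1
kind: Secret
metadata:
  name: hello-app-tls
  namespace: dev
type: kubernetes.io/tls
# kubernetes.io/tls Secrets must use the keys tls.crt and tls.key,
# and values under `data` must be base64-encoded (or use `stringData` for raw PEM).
data:
  tls.crt: <base64-encoded certificate contents here>
  tls.key: <base64-encoded private key contents here>
```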
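Because values under `data` must be base64-encoded, pasting raw PEM text into that field does not work. A minimal sketch that builds the same Secret as a JSON manifest with the encoding done for you; the helper name `tls_secret_manifest` and the placeholder PEM strings are hypothetical.

```python
import base64
import json


def tls_secret_manifest(name: str, namespace: str, cert_pem: str, key_pem: str) -> dict:
    """Build a kubernetes.io/tls Secret whose `data` values are base64-encoded."""

    def b64(text: str) -> str:
        return base64.b64encode(text.encode("utf-8")).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        # The API server requires these two keys for this Secret type.
        "data": {"tls.crt": b64(cert_pem), "tls.key": b64(key_pem)},
    }


# Placeholder PEM text for illustration only; use your real certificate and key.
manifest = tls_secret_manifest(
    "hello-app-tls",
    "dev",
    "-----BEGIN CERTIFICATE-----\n...\n-----END CERTIFICATE-----\n",
    "-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n",
)
# kubectl accepts JSON manifests as well as YAML.
print(json.dumps(manifest, indent=2))
```

In practice, `kubectl create secret tls hello-app-tls --cert=tls.crt --key=tls.key -n dev` does the same encoding from files (the file paths here are placeholders).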

You can secure an Ingress by specifying a Secret that contains a TLS private key and certificate. The Ingress resource only supports a single TLS port, 443, and assumes TLS termination at the ingress point (traffic to the Service and its Pods is in plaintext).

Referencing this secret in an Ingress tells the Ingress controller to secure the channel from the client to the load balancer using TLS. You need to make sure the TLS secret you created came from a certificate that contains a Common Name (CN), also known as a Fully Qualified Domain Name (FQDN) for `https-example.foo.com`.

https://kubernetes.io/docs/concepts/services-networking/ingress/

I hit this error when installing the cert-manager Helm chart and creating the ClusterIssuer in the same run:

custom resource (ClusterIssuer) takes some time to properly register in API server. In case when CRD ClusterIssuer was just created and then immediately one tries to create custom resource — this error will happen.

https://github.com/hashicorp/terraform-provider-kubernetes-alpha/issues/72

So I had to split the cert-manager chart installation from the ClusterIssuer creation.
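Splitting the steps works. Another option is to wait until the CRD is registered before creating the ClusterIssuer: with kubectl, `kubectl wait --for=condition=Established crd/clusterissuers.cert-manager.io` does that. In a script, a retry with exponential backoff achieves the same. The sketch below assumes you map your client’s “no matches for kind” error to the hypothetical `KindNotRegisteredError`; `create_with_retry` and the fake create function are also hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class KindNotRegisteredError(Exception):
    """Hypothetical: raised when the API server does not know the custom kind yet."""


def create_with_retry(
    create: Callable[[], T],
    attempts: int = 10,
    initial_delay: float = 1.0,
    max_delay: float = 30.0,
) -> T:
    """Call `create` until it succeeds, backing off while the CRD is not registered."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        try:
            return create()
        except KindNotRegisteredError:
            if attempt == attempts:
                raise  # give up: the CRD never became available
            time.sleep(delay)
            delay = min(delay * 2, max_delay)  # exponential backoff, capped
    raise AssertionError("unreachable")


# Usage with a hypothetical stand-in that fails twice, as a freshly created CRD might.
calls = {"n": 0}


def fake_create_cluster_issuer() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise KindNotRegisteredError('no matches for kind "ClusterIssuer"')
    return "clusterissuer created"


result = create_with_retry(fake_create_cluster_issuer, initial_delay=0.01)
print(result, "after", calls["n"], "attempts")  # clusterissuer created after 3 attempts
```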